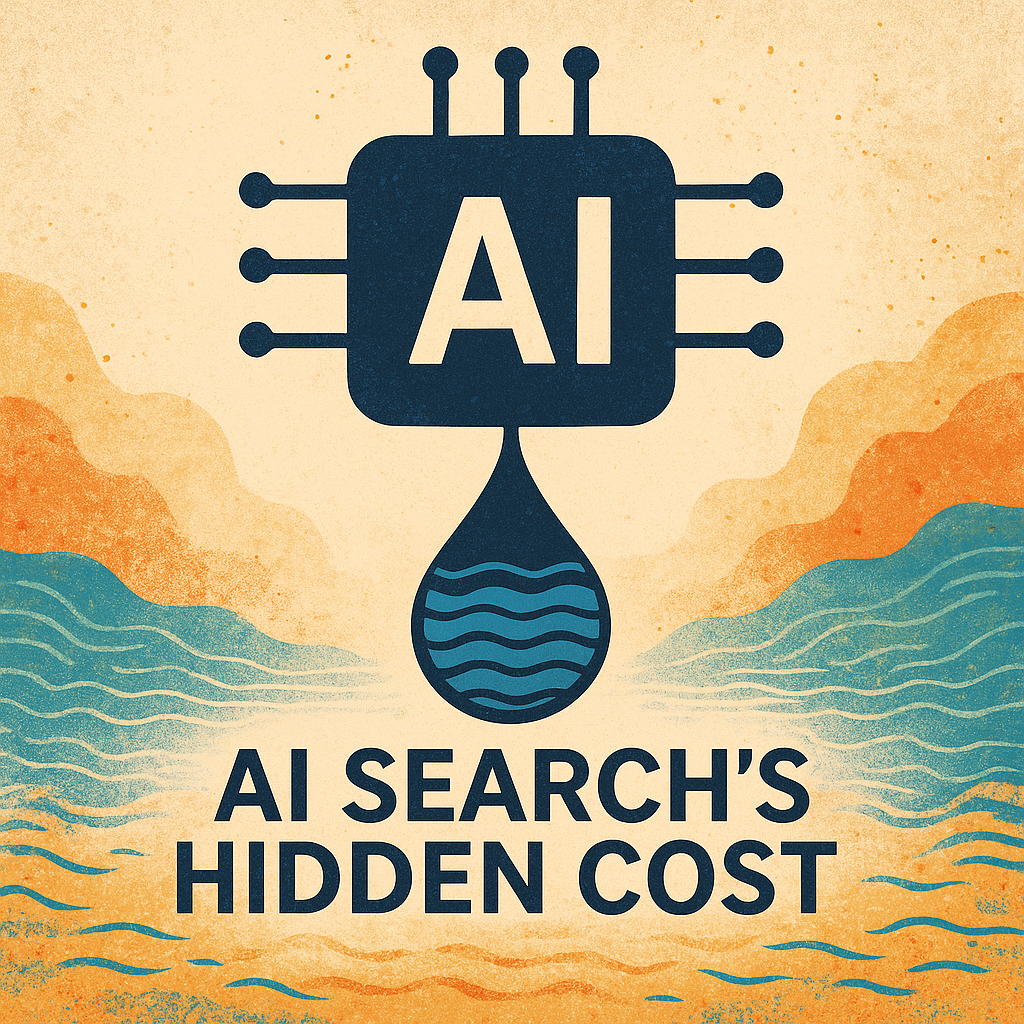

Every time we type a question into an AI-powered search engine, it feels almost magical — instant answers, effortless intelligence, infinite access. But behind that convenience lies an uncomfortable truth: each digital query consumes not just electricity, but also water — a lot of it.

As artificial intelligence reshapes the way we search, learn, and create, it is also quietly rewriting the planet’s environmental balance sheet.

Every Question Has a Physical Cost

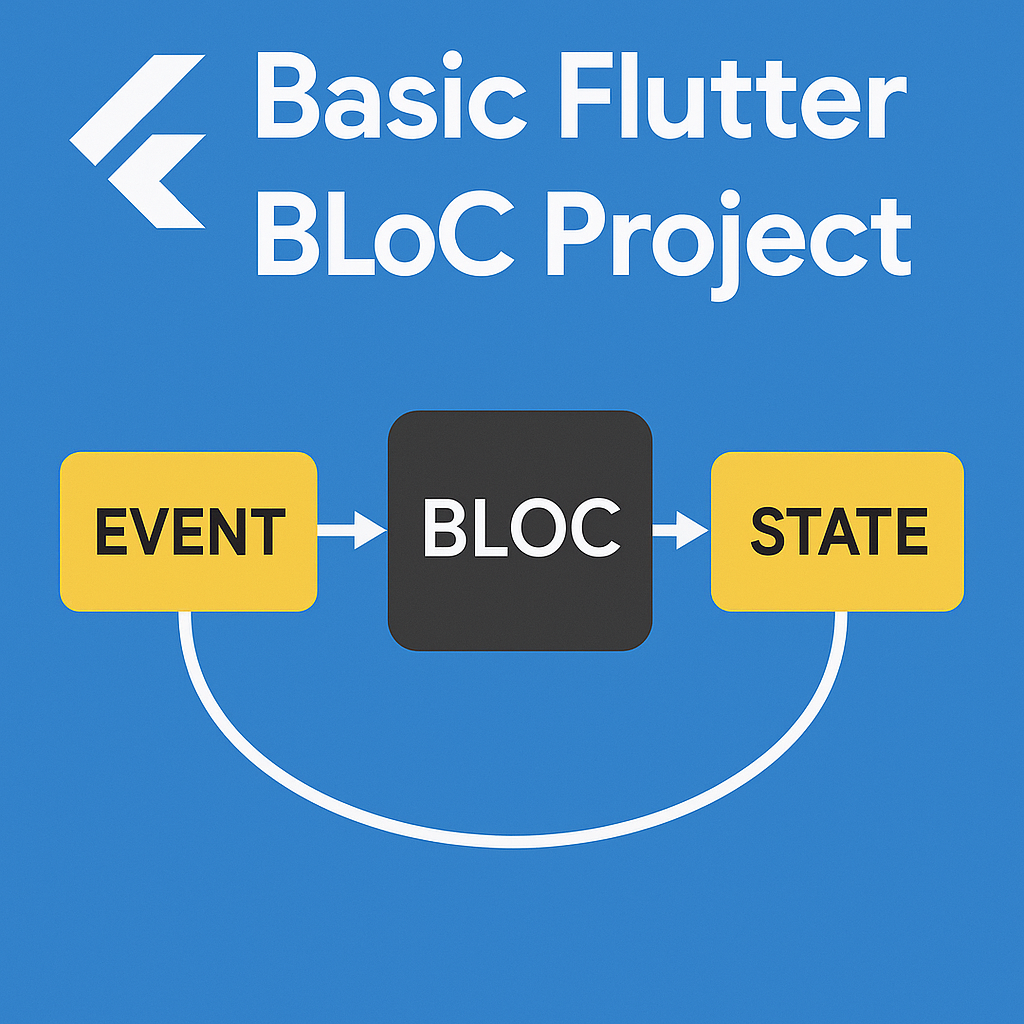

Modern AI systems, especially large language models used in search, rely on massive data centers filled with processors running day and night. These machines generate enormous heat, which must be constantly cooled to avoid system failure.

That cooling process — often achieved through water-based systems — means that every AI-generated answer comes with a hidden water footprint.

Some estimates suggest that generating just a few hundred words of AI text can indirectly consume half a liter of fresh water when considering the water needed to cool the servers processing it. Scaled to millions of daily queries, that translates into millions of liters of water used every single day to satisfy our digital curiosity.
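To make that scaling concrete, here is a minimal back-of-the-envelope sketch. It takes the half-liter-per-response estimate above at face value. The daily volumes are hypothetical round numbers chosen only for illustration, and the function name `daily_water_liters` is invented for this example.

```python
# Indirect cooling water per AI response, in liters.
# Taken from the estimate quoted above; an assumption, not a measurement.
LITERS_PER_RESPONSE = 0.5


def daily_water_liters(responses_per_day: int,
                       liters_per_response: float = LITERS_PER_RESPONSE) -> float:
    """Return the water attributed to one day of responses, in liters."""
    return responses_per_day * liters_per_response


# Hypothetical daily volumes, used only to show how the total scales.
for responses in (1_000_000, 10_000_000, 100_000_000):
    liters = daily_water_liters(responses)
    print(f"{responses:>11,} responses/day -> {liters:>12,.0f} liters/day")
```

The relationship is linear: under this assumption, every doubling of query volume doubles the water attributed to it.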

The Growing Energy Appetite of AI

AI doesn’t just sip electricity — it devours it.

According to the International Energy Agency (IEA), global data centers are projected to consume around 945 terawatt-hours of electricity per year by 2030, just under 3% of total global electricity use. AI systems are a major driver of that growth, with electricity demand from “accelerated computing” (the GPU-based servers that power AI) growing at about 30% per year.
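A growth rate of 30% per year compounds quickly. The sketch below shows what that rate implies if it held constant, which is a simplifying assumption for illustration and not a forecast from the IEA. The function names are invented for this example.

```python
import math

# Annual growth rate quoted above for accelerated computing.
GROWTH_RATE = 0.30


def demand_multiplier(years: int, rate: float = GROWTH_RATE) -> float:
    """Return how many times larger demand is after `years` of compound growth."""
    return (1 + rate) ** years


def doubling_time_years(rate: float = GROWTH_RATE) -> float:
    """Return the number of years needed for demand to double at a constant rate."""
    return math.log(2) / math.log(1 + rate)


print(f"After 5 years: {demand_multiplier(5):.2f}x the starting demand")
print(f"Doubling time: {doubling_time_years():.1f} years")
```

At a steady 30%, demand doubles roughly every 2.6 years and reaches about 3.7 times its starting level after five years.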

By some estimates, an AI-augmented query can require 60–70 times more energy than a traditional search, though published figures vary widely. The gap exists because each answer involves running a complex neural network that predicts, constructs, and refines sentences in real time.

This surge in energy use not only raises carbon emissions but also multiplies water consumption for cooling.

Why Water Matters in the Digital Age

The servers running AI models must stay cool to function. The most common cooling methods — evaporative and liquid cooling systems — rely heavily on water.

- Large data centers can use 500,000 gallons (≈2 million liters) of water per day for cooling.
- Across the U.S., total data center water consumption jumped from 21 billion liters in 2014 to 66 billion liters in 2023.
- In many parts of the world, these centers are built in water-stressed regions, intensifying local scarcity.

Even the power plants generating electricity for data centers use water — meaning the true “water cost” of AI is much higher than the direct cooling figures suggest.

In short: the smarter our machines get, the thirstier they become.

From Virtual to Physical: The Reality of AI Search

The term “cloud” suggests something weightless, invisible, and harmless. But the cloud has a body — and it’s made of metal, silicon, and water.

When an AI model generates an answer, it triggers thousands of physical operations inside servers. Those computations translate into real-world energy and heat, which then demand real-world water and electricity to sustain them.

As AI search becomes the default way of finding information, the environmental impact grows with every additional query. Billions of AI queries per day could mean billions of liters of water evaporated, a cost that is invisible to users but devastating in areas already facing droughts and water shortages.

The Sustainability Challenge

AI’s environmental footprint is not just about scale — it’s about imbalance. The very regions attracting large data centers for connectivity or tax benefits are often those with fragile ecosystems and limited water availability.

If left unchecked, this imbalance could lead to a future where digital progress competes directly with human and ecological needs for water.

Can AI Become Sustainable?

Thankfully, the same intelligence that drives AI can help fix its footprint. Several paths are already emerging:

1. Smarter Cooling Systems

Newer designs use closed-loop liquid cooling that recycles fluids instead of evaporating them. Some centers now use seawater, greywater, or chilled air to reduce freshwater demand.

2. Renewable Power Integration

Powering AI infrastructure with solar, wind, or hydro energy reduces the carbon and indirect water footprint from electricity generation.

3. Efficient Model Design

AI researchers are creating smaller, optimized models that deliver similar accuracy using less computation — and therefore, less energy and cooling.

4. Transparent Metrics

Encouraging companies to report Power Usage Effectiveness (PUE) and Water Usage Effectiveness (WUE) can drive accountability and innovation in resource efficiency.

5. Responsible Infrastructure Planning

Governments and corporations can collaborate to locate data centers in cooler climates or water-abundant regions, minimizing environmental strain.
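The two metrics named under Transparent Metrics are simple ratios, and a short sketch shows how they are computed. PUE divides a facility's total energy by the energy delivered to its IT equipment, so a value of 1.0 would mean no overhead. WUE divides site water use in liters by IT equipment energy in kilowatt-hours. The annual figures below are hypothetical and describe no real data center.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def wue(site_water_liters: float, it_equipment_kwh: float) -> float:
    """Water Usage Effectiveness: site water use (L) / IT equipment energy (kWh)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return site_water_liters / it_equipment_kwh


# Hypothetical annual figures for an imaginary facility (illustration only).
total_kwh = 12_000_000   # everything the site draws, including cooling
it_kwh = 10_000_000      # energy delivered to servers, storage, and network gear
water_liters = 18_000_000

print(f"PUE: {pue(total_kwh, it_kwh):.2f}")
print(f"WUE: {wue(water_liters, it_kwh):.2f} L/kWh")
```

Lower is better for both. Note that WUE in this form covers only water used on site, so it does not capture the water consumed by the power plants supplying the facility.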

The Digital Dilemma

AI is not inherently unsustainable — but its growth model currently is. The faster we demand answers, generate images, or process searches, the faster we deplete the very systems that keep those services alive.

Our digital habits now have physical consequences. Every “smart” answer from an AI system is powered by electricity, cooled by water, and supported by an ecosystem that doesn’t regenerate overnight.

A Smarter Kind of Intelligence

The ultimate goal shouldn’t be just Artificial Intelligence — it should be Sustainable Intelligence.

We need to redefine progress not as faster computation, but as responsible computation — innovation that respects planetary limits.

As AI search becomes part of everyday life, remembering its real-world cost is the first step toward responsible digital behavior. Because the question isn’t only “What does AI know?” — it’s also “What does AI consume?”

The answers may shape not only our future of information but also our future of water, energy, and life itself.